Welcome to the release of Langflow 1.6! We’re always working to improve Langflow for you, and with this release we have:

- Added OAuth authentication for MCP servers, powered by MCP Composer

- Added an OpenAI Responses API-compatible endpoint for running flows, to make it easier to integrate Langflow with your applications

- Added advanced document parsing, powered by Docling, into the File component

- Integrated Traceloop to give you more options for observability

- Made UI improvements to make it easier for you to build your flows

Let’s look at what this means in detail.

💡 For a video rundown of the new features, check out the Langflow 1.6.0 launch video

OAuth for MCP servers

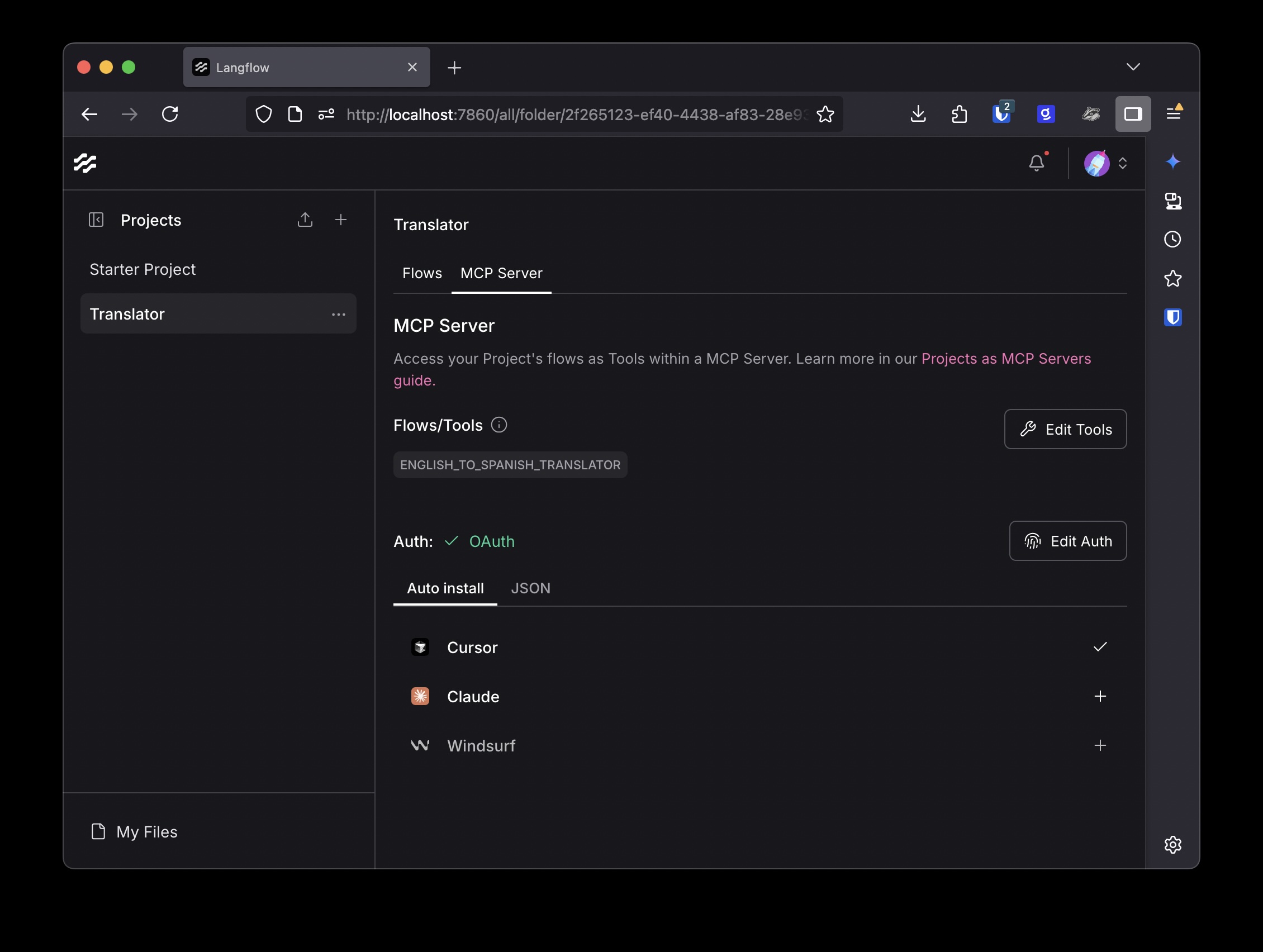

Every Langflow project can be exposed as an MCP server in which each flow is available as a tool. This is a great way to build custom tools for you or your business’s needs. Starting in Langflow 1.6 you can protect the MCP server with OAuth, requiring users to authenticate. This uses the MCP Composer project to provide the authorization.

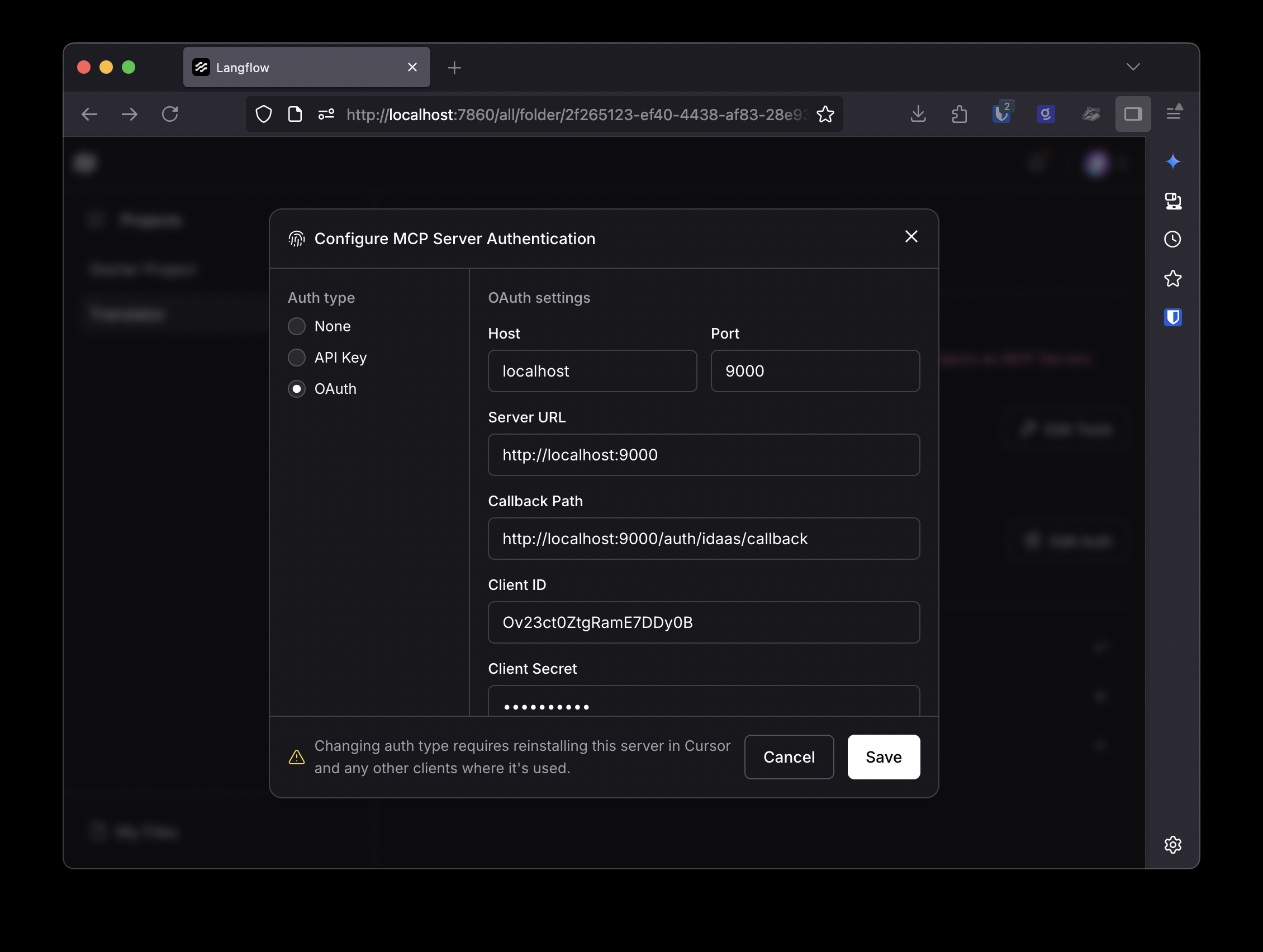

You can enable this protection by opening the MCP Server tab for your project and clicking on Edit Auth. Here you can select between no auth, API key or OAuth. To configure the OAuth settings, you will need an OAuth provider ready to go. Then you will need to register a new client for your OAuth provider and get a client ID and client secret.

Once you’ve done that, you can fill in the details. You need to provide a host and port for an instance of MCP Composer to run on locally. You can then provide a server URL and callback URL, which are the addresses you will use to access the server externally. These can use localhost, or a domain or IP address if you are deploying remotely.

The client ID, client secret, authorization URL and token URL are all provided by your OAuth provider. The MCP scope must be “user” and the provider scope must be “openid”.
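As a rough illustration of the settings above, the sketch below collects them in one place and checks the two scope values before you enter them in the Edit Auth form. The `ComposerOAuthSettings` class, its field names, and the example host, port, and URLs are hypothetical and are not part of Langflow or MCP Composer; only the required scope values come from this post.

```python
from dataclasses import dataclass


@dataclass
class ComposerOAuthSettings:
    """Hypothetical container mirroring the fields in the Edit Auth form."""

    host: str
    port: int
    server_url: str
    callback_url: str
    client_id: str
    client_secret: str
    authorization_url: str
    token_url: str
    mcp_scope: str = "user"
    provider_scope: str = "openid"

    def problems(self) -> list[str]:
        """Return readable problems; an empty list means the values look usable."""
        found = []
        if self.mcp_scope != "user":
            found.append('MCP scope must be "user"')
        if self.provider_scope != "openid":
            found.append('provider scope must be "openid"')
        if not 1 <= self.port <= 65535:
            found.append("port must be between 1 and 65535")
        for name in ("client_id", "client_secret", "authorization_url", "token_url"):
            if not getattr(self, name):
                found.append(f"{name} is empty")
        return found


# Placeholder values only; replace them with the details from your OAuth provider.
settings = ComposerOAuthSettings(
    host="localhost",
    port=9000,
    server_url="http://localhost:9000",
    callback_url="http://localhost:9000/callback",
    client_id="example-client-id",
    client_secret="example-client-secret",
    authorization_url="https://auth.example.com/authorize",
    token_url="https://auth.example.com/token",
)
print(settings.problems())
```

Running a check like this before filling in the form is optional; it simply catches a mistyped scope or an empty field early.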

Once you’ve saved this config, the MCP Composer server will start up. As soon as you get a green check mark, you can add the server to your MCP host application, like Claude, Cursor, or Windsurf. Then, when you try to access the server from the host, you will be taken through an OAuth flow in your browser before you can use the tools. Now you can control access to tools via your OAuth provider.

💡 For a more in-depth look at this feature, check out David’s video that shows you how to configure and use OAuth for MCP servers in Langflow.

OpenAI Responses API Compatibility

Langflow already has an API from which you can trigger flows to run and get the results. But we also recognize that the OpenAI API is the most well-known API for AI development. For that reason, we have made a new API endpoint that is compatible with the OpenAI Responses API.

This means that when you want to run a flow from the API, you can make a request to `/api/v1/responses`, passing the flow ID as the model along with your input, and you will get back a response that matches the Responses API format. For example, in Python you could do this:

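```python
import requests

# Replace with the ID of the flow you want to run.
YOUR_FLOW_ID = "your-flow-id"

r = requests.post(
    "http://localhost:7861/api/v1/responses",
    # The flow ID goes where the Responses API expects a model name.
    # Passing json= serializes the body and sets the Content-Type header.
    json={
        "model": YOUR_FLOW_ID,
        "input": "Hello, how are you?",
    },
)

# Print the text of the first content item in the first output.
print(r.json()["output"][0]["content"][0]["text"])
```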

You can also use the OpenAI API libraries, although there is one quirk right now: if you need to pass an API key, you must send it as an additional header and also pass a dummy API key to the library, like this:

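```python
from openai import OpenAI

# Replace with the ID of the flow you want to run.
YOUR_FLOW_ID = "your-flow-id"

client = OpenAI(
    base_url="http://localhost:7861/api/v1/",
    # The real API key is sent as an additional header.
    default_headers={"x-api-key": "sk-0e3..."},
    # The library insists on an API key, so pass a dummy value.
    api_key=":-P",
)

response = client.responses.create(
    model=YOUR_FLOW_ID,
    input="Hello, how are you?",
)

print(response.output_text)
```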

For more details, check out the documentation on the OpenAI compatibility.
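The examples above need a running Langflow server, so it can help to see the request and response shapes on their own. The sketch below builds the headers and JSON body for `/api/v1/responses` and pulls the text out of a reply. The helper names `build_request` and `extract_text` are hypothetical, and the sample reply is hand-written and trimmed to the fields the earlier example reads (`output`, `content`, `text`); a real reply will contain more fields.

```python
from typing import Optional

BASE_URL = "http://localhost:7861/api/v1"  # same host and port as the examples above


def build_request(flow_id: str, text: str, api_key: Optional[str] = None):
    """Return (url, headers, body) for a call to the responses endpoint."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # The API key travels in the x-api-key header.
        headers["x-api-key"] = api_key
    body = {"model": flow_id, "input": text}
    return f"{BASE_URL}/responses", headers, body


def extract_text(payload: dict) -> str:
    """Join every text part found under output[*].content[*]."""
    parts = []
    for item in payload.get("output", []):
        for content in item.get("content", []):
            if "text" in content:
                parts.append(content["text"])
    return "".join(parts)


# Placeholder flow ID and key, plus a hand-written reply for illustration.
url, headers, body = build_request("your-flow-id", "Hello, how are you?", api_key="sk-example")
sample_reply = {"output": [{"content": [{"text": "I'm doing well, thanks!"}]}]}

print(url)
print(extract_text(sample_reply))
```

To send the request for real, you could pass these values to `requests.post(url, headers=headers, json=body)` and hand the parsed JSON to `extract_text`.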

Upgraded File component

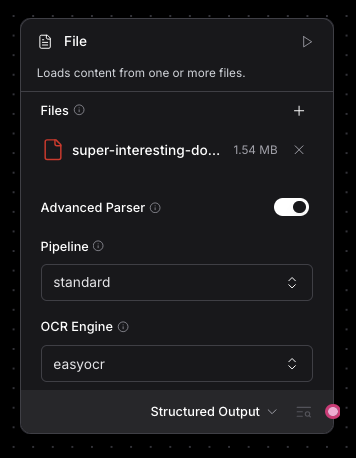

In Langflow 1.5 we introduced the Docling bundle. Since file processing is such a key part of any RAG-style application, we wanted to make the advanced document parsing capabilities of Docling more easily available. In Langflow 1.6 you will find an Advanced Parser setting in the File component that upgrades it to use the Docling file parser.

Once you’ve added a file to the component, just check the Advanced Parser option and you can configure the parser to use either the standard or VLM pipeline, and the OCR engine you want to use.

In advanced mode, you can choose to output either structured output or markdown.

In Langflow 1.5 the Docling dependency wasn’t included, and you needed to install it yourself. Since it is such a core part of document processing, the Docling dependency is bundled in Langflow 1.6.

Powering your file ingestion with Docling will produce clean, structured data from messy PDFs and other documents.

More observability options

When deploying your agents to production, you need to keep an eye on them to ensure they are serving your users well. Monitoring their behavior is crucial to this, and in Langflow 1.6 Traceloop joins the list of available observability platforms that already includes Arize, Langfuse, LangSmith, LangWatch and Opik. Just set the right environment variables and your flows will start reporting to Traceloop.
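Before starting Langflow, a quick check like the one below can confirm that the variables are present. This is only a sketch: `TRACELOOP_API_KEY` is an assumed variable name and `missing_variables` is a hypothetical helper, so check the Langflow observability documentation for the exact names your version expects.

```python
import os

# Assumed variable name; confirm it against the Langflow documentation.
REQUIRED_VARIABLES = ["TRACELOOP_API_KEY"]


def missing_variables(required, environ=os.environ):
    """Return the names in `required` that are unset or empty in `environ`."""
    return [name for name in required if not environ.get(name)]


missing = missing_variables(REQUIRED_VARIABLES)
if missing:
    print("Set these before starting Langflow:", ", ".join(missing))
else:
    print("Traceloop variables are set.")
```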

UI Updates

We continue to iterate on the Langflow interface to make sure it works well for you. In Langflow 1.6 we’ve updated the components sidebar to separate core components, bundles, and MCP servers. You can always use the search bar to find any component or available MCP server, but it’s now easier to browse the core components and keep track of the MCP servers you have added to Langflow.

For more on all the changes that we’ve made, check out the release notes for version 1.6.0. For all the detail, you can dig into the release on GitHub.

Join the community

We’re always working hard to make Langflow better for you, and we can’t do it without you, your support and your feedback. There are loads of ways to be part of the community. You can:

- Join us in the Discord community to connect with fellow builders

- Check out the GitHub repo to contribute or 🌟 the project

- Subscribe to the YouTube channel for tutorials on building with Langflow

- Subscribe to the AI++ newsletter for the latest articles about building with AI, agents and MCP

We cannot wait to see what you build with this new version, so download Langflow 1.6 and let us know what you think.