There’s nothing that gets the generative AI world as excited as a model launch, and last week OpenAI launched not only the open-source, Apache 2.0-licensed gpt-oss model family but also the long-awaited GPT-5. For developers, that means five new models: gpt-oss-20b, gpt-oss-120b, gpt-5, gpt-5-mini, and gpt-5-nano. To find out how to experiment with these new models in Langflow, read on, or check out Melissa's YouTube video on using GPT-5 or gpt-oss-20b in Langflow.

gpt-oss on your machine

There are a few options for running the open-source models locally. If you happen to have an 80 GB GPU to hand, you should be able to run gpt-oss-120b. If not, you can still run gpt-oss-20b as long as you have 16 GB of memory.
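Where do those hardware numbers come from? Here is a rough, back-of-envelope sketch. It assumes OpenAI's published totals of about 117B parameters for gpt-oss-120b and 21B for gpt-oss-20b, and, as a simplification, that every weight is stored in the 4-bit MXFP4 format (4 bits per value plus one shared 8-bit scale per block of 32 values, so 4.25 bits per parameter). In practice some layers are kept at higher precision, and the KV cache and activations need memory too, so treat the result as a lower bound rather than a requirement.

```python
# Rough estimate of the memory needed just to hold the model weights.
# Assumptions (ours): ~117B total parameters for gpt-oss-120b, ~21B for
# gpt-oss-20b, and every weight stored as MXFP4 (a simplification).
MXFP4_BITS_PER_PARAM = 4 + 8 / 32  # 4-bit values + one 8-bit scale per 32 values = 4.25

PARAMS = {
    "gpt-oss-120b": 117e9,
    "gpt-oss-20b": 21e9,
}


def weight_memory_gb(num_params: float, bits_per_param: float = MXFP4_BITS_PER_PARAM) -> float:
    """Approximate weight size in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


for name, n in PARAMS.items():
    # Ignores the KV cache, activations and higher-precision layers,
    # so real memory use will be higher than this figure.
    print(f"{name}: ~{weight_memory_gb(n):.1f} GB of weights")
```

That works out to roughly 62 GB for gpt-oss-120b and 11 GB for gpt-oss-20b, which leaves some headroom on an 80 GB GPU and a 16 GB machine respectively.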

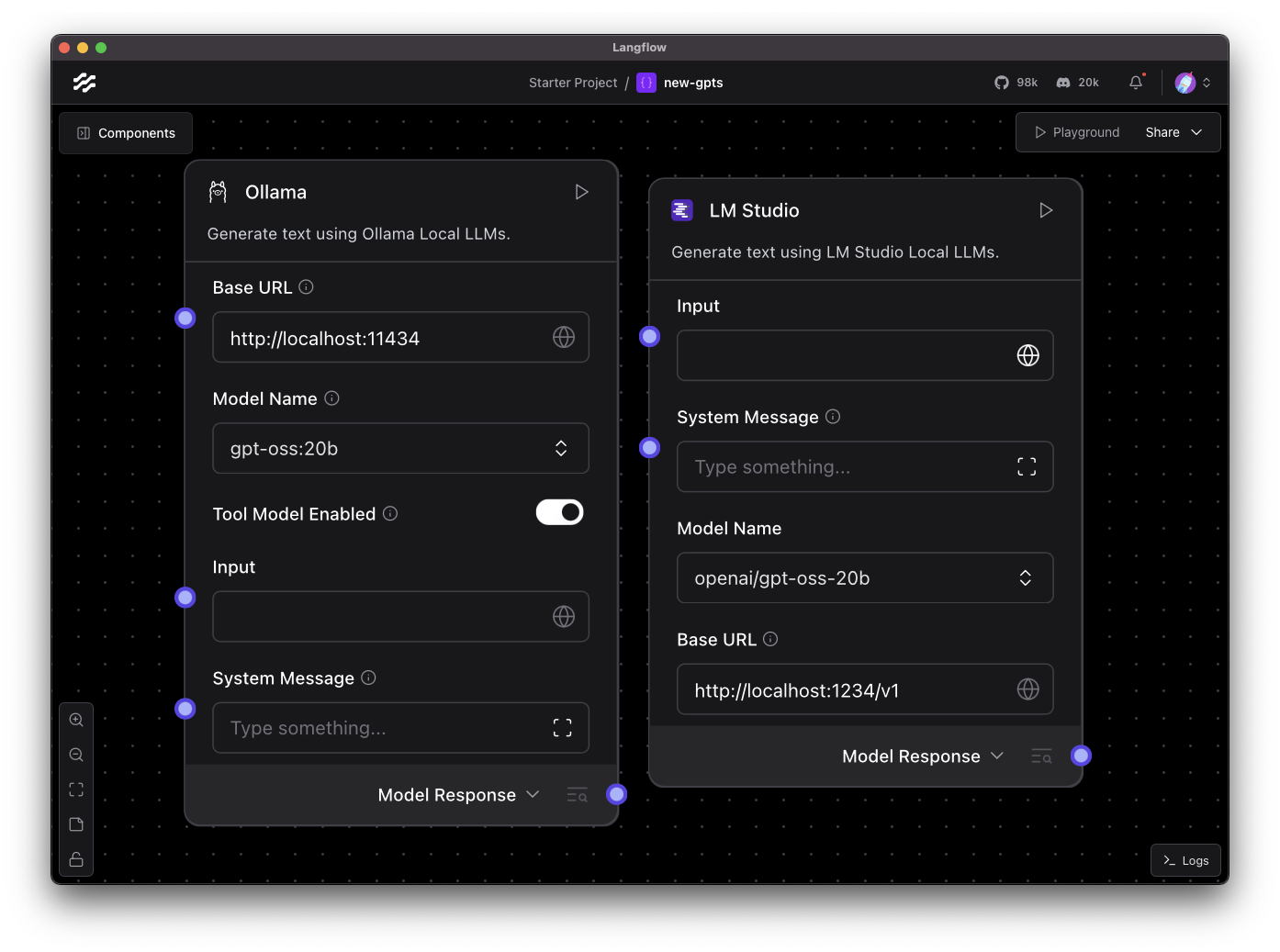

Within Langflow, you can run local models via the Ollama and LM Studio components, and either of them can run the gpt-oss models for you. For Ollama, install the model from the command line with `ollama pull gpt-oss:20b`. In LM Studio, search for “gpt-oss” in the model search, or go to the LM Studio model page for gpt-oss-20b, and install it from there. Then make sure your service is running, drag an Ollama or LM Studio component onto the Langflow canvas, and enter the base URL (by default, Ollama listens on http://localhost:11434 and LM Studio's local server on http://localhost:1234). The component will populate its model list, and you should see the new gpt-oss-20b ready to use.
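If the model doesn't show up in the component, it can help to check what your local server is actually reporting. The sketch below approximates the discovery step by querying Ollama's `GET /api/tags` endpoint and LM Studio's OpenAI-compatible `GET /v1/models` endpoint, then filtering for gpt-oss. It is not Langflow's own code, and the default ports are assumptions you may need to change.

```python
import json
import urllib.request

# Assumed default local endpoints; adjust if your servers use other ports.
OLLAMA_BASE_URL = "http://localhost:11434"
LM_STUDIO_BASE_URL = "http://localhost:1234/v1"


def parse_ollama_tags(payload: dict) -> list[str]:
    """Model names from an Ollama GET /api/tags response."""
    return [model["name"] for model in payload.get("models", [])]


def parse_openai_models(payload: dict) -> list[str]:
    """Model ids from an OpenAI-compatible GET /models response, as served by LM Studio."""
    return [model["id"] for model in payload.get("data", [])]


def gpt_oss_only(names: list[str]) -> list[str]:
    """Keep only names that look like gpt-oss models."""
    return [name for name in names if "gpt-oss" in name]


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    """GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    checks = [
        ("Ollama", f"{OLLAMA_BASE_URL}/api/tags", parse_ollama_tags),
        ("LM Studio", f"{LM_STUDIO_BASE_URL}/models", parse_openai_models),
    ]
    for label, url, parse in checks:
        try:
            print(label, gpt_oss_only(parse(fetch_json(url))))
        except OSError as err:  # URLError and timeouts are OSError subclasses
            print(label, "not reachable:", err)
```

After `ollama pull gpt-oss:20b`, the Ollama line should list `gpt-oss:20b`. An empty list means the server is up but the model isn't installed, and "not reachable" means the service isn't running or the base URL is wrong.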

gpt-oss as a service

There are plenty of services out there that run models for you, and many have jumped on the opportunity to make the gpt-oss models available. These are the services that are running the gpt-oss models and have Langflow components that you can use:

- IBM watsonx.ai (gpt-oss-120b)

- NVIDIA NIM (gpt-oss-20b, gpt-oss-120b)

- Groq (gpt-oss-20b, gpt-oss-120b)

- Azure AI Foundry (gpt-oss-20b, gpt-oss-120b)

- OpenRouter (gpt-oss-20b, gpt-oss-120b)

- Novita AI (gpt-oss-20b, gpt-oss-120b)

- AI/ML API (gpt-oss-20b, gpt-oss-120b)

For most of these services, you can find the component, drag it onto the canvas, and add your API key. This triggers the model list to load, and you will find the new open models there. Some services, like Azure AI Foundry, require a bit more work, but once you’ve deployed the model you can use it through Langflow like any other.
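Several of these providers expose an OpenAI-compatible API, and loading a model list after you add an API key generally amounts to an authenticated `GET <base URL>/models` request. The sketch below builds that request for two providers and filters the response for gpt-oss ids. The base URLs are our assumptions to check against each provider's documentation, the `GROQ_API_KEY` environment variable name is hypothetical, and none of this is taken from Langflow's components.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible base URLs; verify against each provider's docs.
PROVIDER_BASE_URLS = {
    "groq": "https://api.groq.com/openai/v1",
    "openrouter": "https://openrouter.ai/api/v1",
}


def build_models_request(provider: str, api_key: str) -> urllib.request.Request:
    """Build an authenticated GET <base>/models request."""
    url = f"{PROVIDER_BASE_URLS[provider]}/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def gpt_oss_ids(payload: dict) -> list[str]:
    """Model ids in an OpenAI-style /models response that mention gpt-oss."""
    return [model["id"] for model in payload.get("data", []) if "gpt-oss" in model["id"]]


if __name__ == "__main__":
    api_key = os.environ.get("GROQ_API_KEY", "")  # hypothetical variable name for this sketch
    if api_key:
        with urllib.request.urlopen(build_models_request("groq", api_key), timeout=10) as resp:
            print(gpt_oss_ids(json.load(resp)))
```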

GPT-5 in Langflow

You can also access OpenAI’s brand-new GPT-5 models through Langflow. However, at the time of writing, you need to make a small edit to the OpenAI component (at least until this pull request is merged and released).

To use GPT-5 in Langflow today you need to:

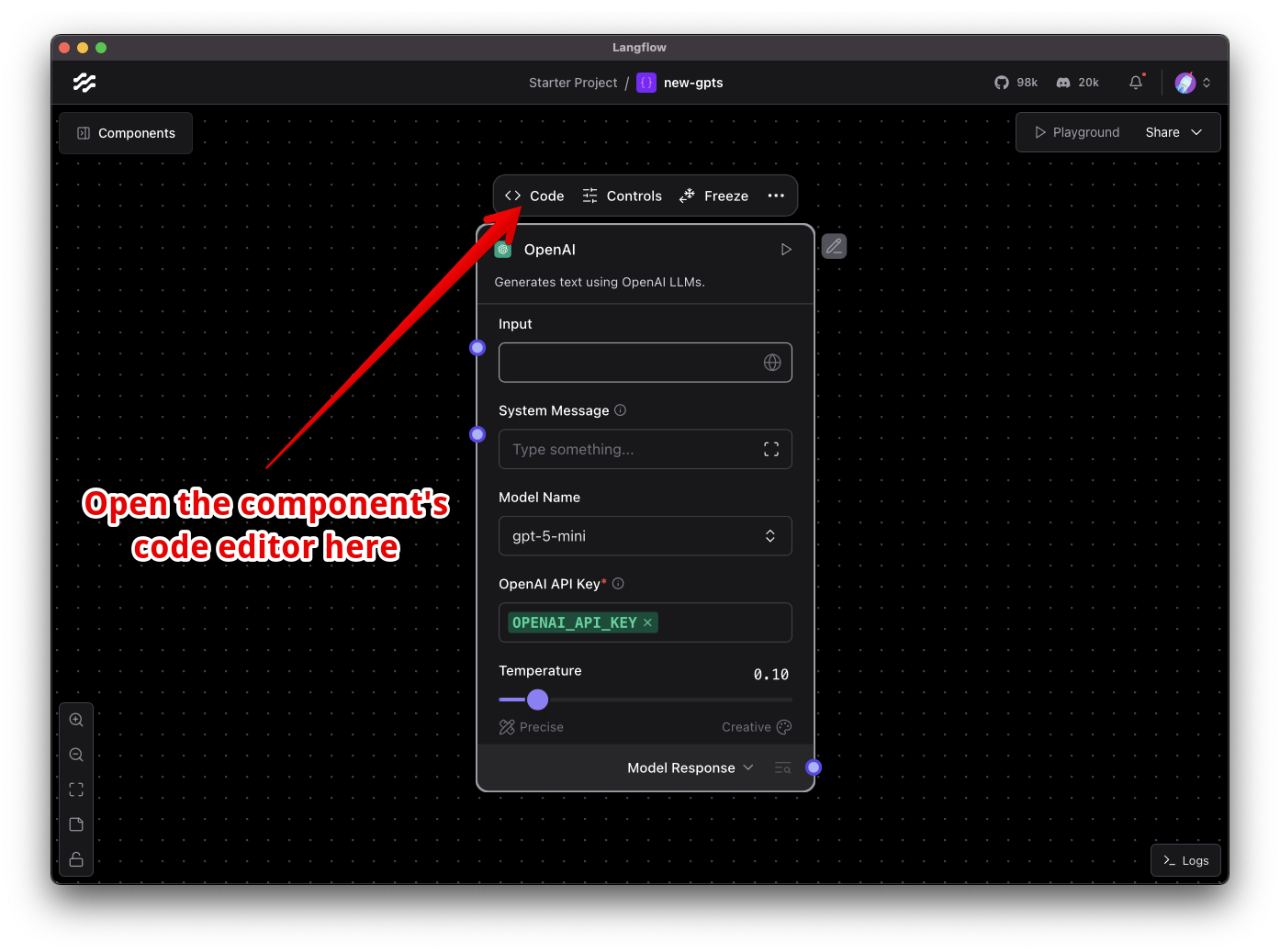

- Drag an OpenAI component onto the canvas

- Open the component’s code editor

The OpenAI component on the Langflow canvas. When you select the element, four buttons appear above the component, the first one is the one for the Code editor. - Add the following line after the imports and before the class definition:

OPENAI_REASONING_MODEL_NAMES = OPENAI_REASONING_MODEL_NAMES + ["gpt-5", "gpt-5-mini", "gpt-5-nano"]

The new model names will appear at the bottom of the model drop down ready for you to use.
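Why is that one line enough, and why does its position matter? The self-contained sketch below mimics the component's structure under an assumption based on our reading of the component, not verified against every Langflow version: the model drop-down options are built in the class body by concatenating the chat and reasoning model lists. `FakeOpenAIComponent`, the stand-in `openai_constants` module, and the list contents are hypothetical placeholders.

```python
import types

# Stand-in for Langflow's constants module; list contents are placeholders.
constants = types.ModuleType("openai_constants")
constants.OPENAI_CHAT_MODEL_NAMES = ["gpt-4o-mini", "gpt-4o"]
constants.OPENAI_REASONING_MODEL_NAMES = ["o1", "o3-mini"]

# Equivalent of `from ... import OPENAI_CHAT_MODEL_NAMES, OPENAI_REASONING_MODEL_NAMES`.
OPENAI_CHAT_MODEL_NAMES = constants.OPENAI_CHAT_MODEL_NAMES
OPENAI_REASONING_MODEL_NAMES = constants.OPENAI_REASONING_MODEL_NAMES

# The added line: `+` builds a new list and rebinds the name in this module only,
# so the shared constants module is left untouched.
OPENAI_REASONING_MODEL_NAMES = OPENAI_REASONING_MODEL_NAMES + ["gpt-5", "gpt-5-mini", "gpt-5-nano"]


class FakeOpenAIComponent:
    # A class body runs once, at definition time, so the options are built from
    # whatever the names refer to at this point. That is why the line must come
    # before the class definition.
    model_options = OPENAI_CHAT_MODEL_NAMES + OPENAI_REASONING_MODEL_NAMES

    def is_reasoning_model(self, model_name: str) -> bool:
        # Looked up in this module's globals at call time, i.e. the rebound list.
        return model_name in OPENAI_REASONING_MODEL_NAMES


print(FakeOpenAIComponent.model_options[-3:])  # ['gpt-5', 'gpt-5-mini', 'gpt-5-nano']
print(constants.OPENAI_REASONING_MODEL_NAMES)  # ['o1', 'o3-mini']
```

Because the new names are appended to the reasoning list, they land at the end of the options, and the component treats them as reasoning models rather than standard chat models.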

Other GPT-5 services

You can also access GPT-5 through Azure AI Foundry, AI/ML API, or OpenRouter, each of which has a Langflow component.

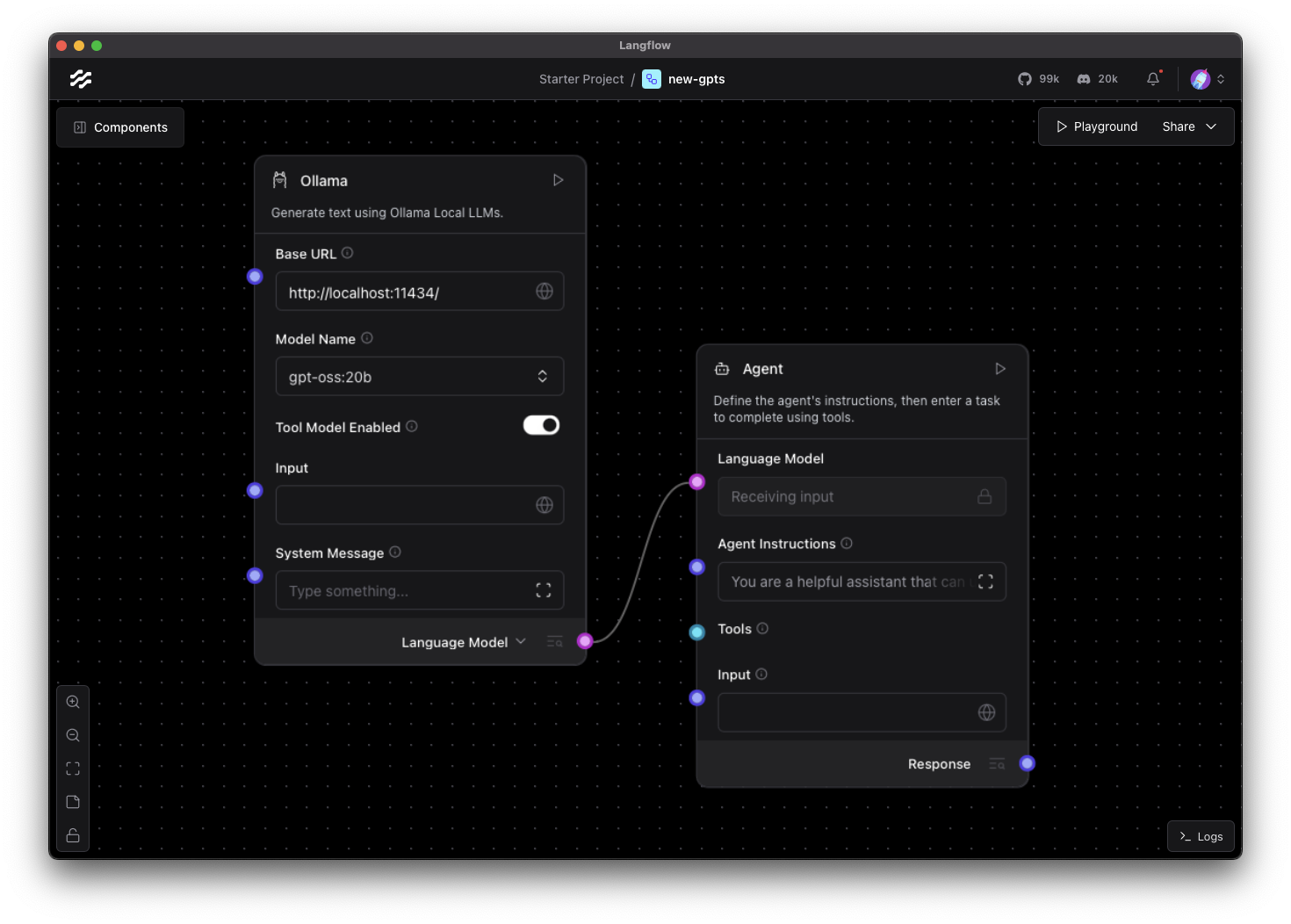

Using the models as agents

The announcements for both gpt-oss and GPT-5 praised the models’ tool-calling performance, which suggests they should work well as agents. To use them with the Langflow Agent component, configure the Agent component to use a custom model. Then, on whichever model component you use, set the output to Language Model and connect it to the Agent component’s Language Model input. From there, you can hook up your tools and MCP servers and try out the GPT-5 or gpt-oss models in your agentic workflows.
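The Agent component runs the tool-calling loop for you, but it can help to see what "tool calling" means at the message level. This sketch uses the OpenAI chat-completions message format, which many of the providers above also expose: a tool is described with a JSON Schema, the model replies with `tool_calls` whose arguments arrive as a JSON string, and each result is sent back as a `role: "tool"` message. The `get_weather` tool and the example reply are hypothetical and hand-written; this is an illustration, not Langflow's implementation.

```python
import json

# A tool described in the OpenAI chat-completions "tools" format.
# get_weather is a hypothetical example tool.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def get_weather(city: str) -> str:
    # Stub implementation so the sketch runs offline.
    return f"It is sunny in {city}."


TOOL_REGISTRY = {"get_weather": get_weather}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each tool call in an assistant message and return tool-result messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = TOOL_REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return results


# Hand-written example of a tool-call reply (not real model output).
example_reply = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_1",
            "type": "function",
            "function": {"name": "get_weather", "arguments": "{\"city\": \"Sydney\"}"},
        }
    ],
}

print(run_tool_calls(example_reply))
# [{'role': 'tool', 'tool_call_id': 'call_1', 'content': 'It is sunny in Sydney.'}]
```

In a real agent loop, these tool messages are appended to the conversation and sent back to the model, which either calls more tools or produces a final answer.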

Build with the latest models in Langflow

Whatever you’re building with Langflow, you can use the latest OpenAI models. From open-source models running on your own machine to GPT-5 in the cloud, you can plug them into your flows and start experimenting with and evaluating them.